In this blog post, we will see how to deploy a Docker Swarm cluster using Ansible. We will also see how to deploy a service and a stack to this cluster, again using Ansible.

Pre-requisites

- 4 servers or VMs (see this article to create VMs with Vagrant)

- Ansible installed on your local machine

- Understanding of Ansible concepts

- Understanding of Docker Swarm concepts

What is Ansible?

Ansible is an open-source automation tool that is used for configuration management, application deployment, and task automation.

The key concept behind Ansible is that it is agentless: it does not require a dedicated agent on the target hosts, unlike other configuration management tools such as Chef and Puppet (most modules only need Python on the target). Instead, Ansible uses SSH to connect to remote hosts and execute tasks, so there is no extra daemon to install, upgrade, or secure on each server.

Ansible operates on the principle of idempotence: if a host is already in the state that a task describes, running the task again makes no changes. This ensures that Ansible can be used to perform tasks in a repeatable and predictable manner, without causing unintended consequences.

Another important concept in Ansible is the idea of playbooks, which are YAML files that contain a series of tasks to be executed on a set of hosts. Playbooks provide a way to organize and automate complex tasks, making it easier to manage infrastructure at scale.
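To make idempotence and playbooks concrete, here is a minimal sketch of a playbook with a single task. The file name and the curl package are arbitrary choices for illustration, and the apt module assumes a Debian or Ubuntu target.

```yaml
# idempotence-demo.yml (hypothetical file name, for illustration only)
- name: Demonstrate an idempotent task
  hosts: all
  become: yes
  tasks:
    # If the package is missing, the first run installs it and reports "changed".
    # Later runs find it already present and report "ok" without changing anything.
    - name: Ensure curl is installed
      apt:
        name: curl
        state: present
```

The task describes a desired state ("present") rather than an action ("install"), which is what lets Ansible skip the work when nothing needs to change.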

Let's start

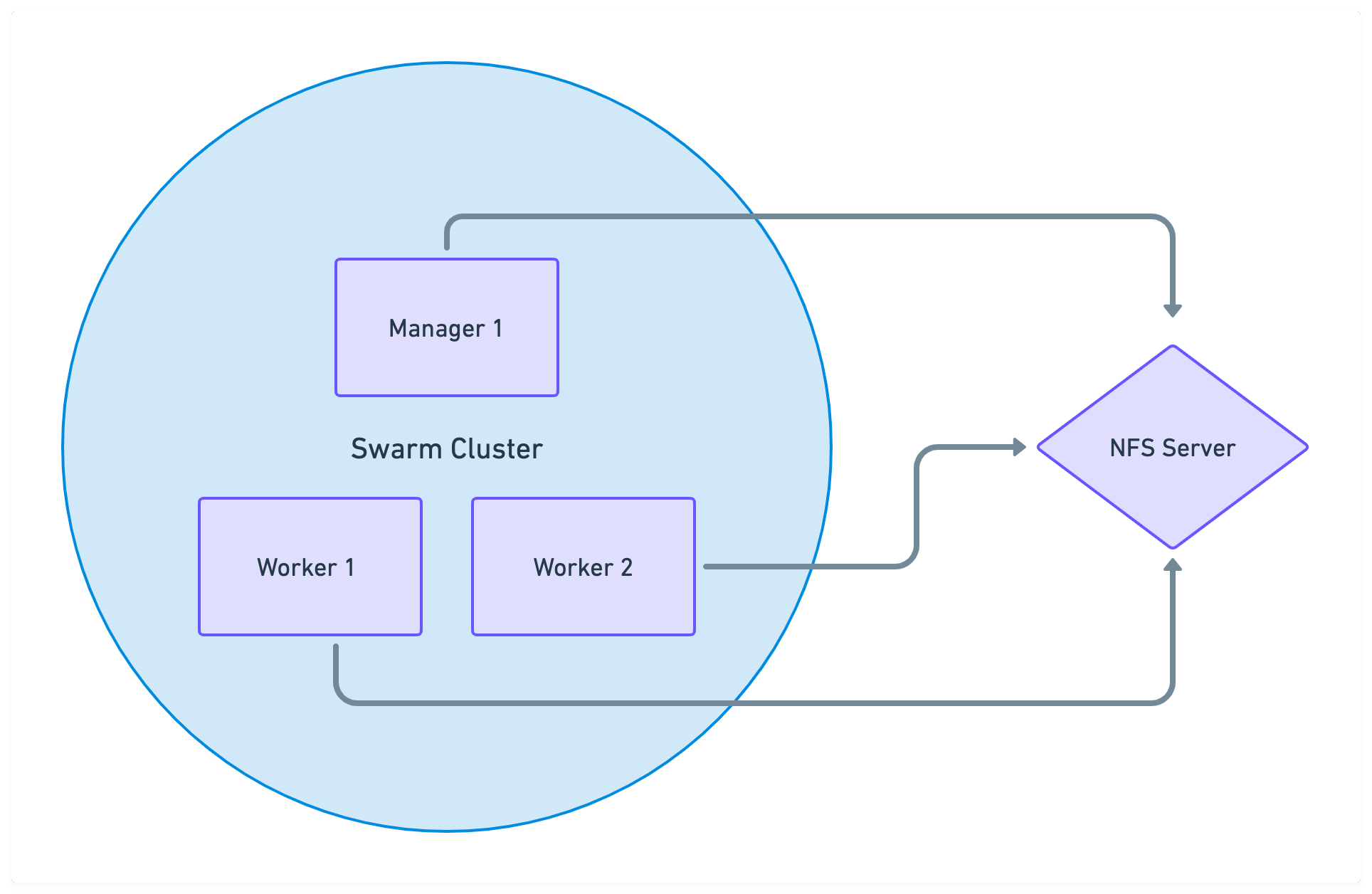

Before starting to create our Docker Swarm cluster, we need to understand the architecture of our cluster.

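In this diagram, there are two key points to note:

- We have 1 manager node and 2 worker nodes. With Docker Swarm, the number of manager nodes should be odd because these nodes use the Raft consensus algorithm to manage the cluster state. Raft needs a majority of managers to agree: a cluster with N managers tolerates the loss of at most (N-1)/2 of them, so an even number adds no extra fault tolerance. With a single manager, as here, losing the manager means losing control of the cluster, which is acceptable for a lab.

- The NFS server is used to share the configuration files between the different servers of the cluster. In this way, a service finds the same files on whichever node it runs, and we can easily deploy services across the cluster.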

Create the inventory

The first step is to create the inventory file. This file contains the list of servers that we want to manage with Ansible.

You need to create a file 00_inventory.yml with the following content:

all:
  children:
    docker:
      children:
        managers:
          hosts:
            192.168.56.10:
        workers:
          hosts:
            192.168.56.11:
            192.168.56.12:
    nfs_server:
      hosts:
        192.168.56.13:

In this file, we have 2 top-level groups:

- docker: This group contains the servers that will be part of the Docker Swarm cluster. In this group, we have 2 subgroups:

  - managers: This group contains the manager nodes of the cluster

  - workers: This group contains the worker nodes of the cluster

- nfs_server: This group contains the server that will be used to share the configuration files between the different servers of the cluster

This file matters because Ansible uses it to know which servers it needs to manage, and each play in the playbook targets its hosts through these group names.
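Before going further, it can be worth checking that Ansible reaches every host listed in this inventory. Here is a minimal sketch, assuming a hypothetical file check.yml stored next to the inventory. The ping module does not send an ICMP ping; it only verifies that Ansible can log in and find a usable Python on the target.

```yaml
# check.yml (hypothetical file name)
- name: Check that every host in the inventory is reachable
  hosts: all
  gather_facts: no
  tasks:
    - name: Verify SSH login and Python availability
      ping:
```

It can be run like the other playbooks of this post, for example with ansible-playbook -i 00_inventory.yml -u vagrant -k check.yml, assuming the vagrant user of a Vagrant environment.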

Create the NFS server

The next step is to create the NFS server. This server will be used to share the configuration files between the different servers of the cluster.

To do this, we need to create a playbook playbook.yml with the following content:

- name: install nfs_server
  hosts: nfs_server
  become: yes
  roles:
    - nfs_server
  tags:
    - nfs_server

In this playbook, we have 3 key concepts:

- hosts: This is the group of servers that we want to manage with this playbook. In this case, we want to manage the servers that are part of the nfs_server group.

- become: This is a boolean that indicates whether we want to execute the tasks with sudo. In this case, we do.

- roles: This is the list of roles that we want to execute. In this case, we want to execute the role nfs_server.

Now, we need to create the role nfs_server. To do this, create a folder roles and run the command ansible-galaxy init roles/nfs_server.

As a reminder, a role is a way of organizing tasks, files, templates, and other Ansible artifacts in a structured way.

So, in our case, this role will contain the tasks that we need to execute to create the NFS server.

Now, we need to edit the file roles/nfs_server/tasks/main.yml with the following content:

- name: Ensure NFS utilities are installed
  apt:
    name: nfs-common,nfs-kernel-server
    state: present
    update_cache: yes
    cache_valid_time: 3600

- name: Ensure directories to export exist
  file:
    path: "/shared/{{ item }}"
    state: directory
  with_items: "{{ nfs_server_dir_data }}"

- name: Copy exports file.
  template:
    src: exports.j2
    dest: /etc/exports
    owner: root
    group: root
    mode: "0644"
  notify: __reload_nfs

This file contains 3 tasks:

The first task is to install the NFS utilities on the server. It uses the apt module to install the packages nfs-common and nfs-kernel-server.

The second task is to create the directories that we want to share with the other servers of the cluster. It uses the file module to create the directories.

It uses the variable nfs_server_dir_data to know which directories it needs to create. To define this variable, we need to update the file roles/nfs_server/defaults/main.yml with the following content:

nfs_server_dir_data:
  - "services"
  - "projects"

With this variable, Ansible will create 2 directories: /shared/services and /shared/projects.

The third task renders the template exports.j2 to the file /etc/exports on the server, using the template module. To define this template, we need to create the file roles/nfs_server/templates/exports.j2 with the following content:

{% for export in nfs_server_dir_data %}
/shared/{{ export }} 192.168.56.0/24(rw,sync,no_root_squash,no_subtree_check)
{% endfor %}

This file contains the configuration of the NFS server. In this case, we want to share the directories /shared/services and /shared/projects with the other servers of the cluster, so we use the subnet 192.168.56.0/24 to allow every server that has an IP address from 192.168.56.1 to 192.168.56.254.

The last thing that we need to do is to make the NFS server reload its exports whenever /etc/exports changes. To do this, we need to create a handler. A handler is a task that runs only when another task notifies it after reporting a change; here, the template task notifies __reload_nfs.

To create this handler, we need to update the file roles/nfs_server/handlers/main.yml with the following content:

- name: __reload_nfs
  command: "exportfs -ra"
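Handlers normally run at the end of the play. If we want to check the exports from within the same role, one option is to flush the handlers first. The sketch below is an optional addition to the end of roles/nfs_server/tasks/main.yml, not part of the original role; the variable name __exports is an assumption.

```yaml
# Run any notified handler now instead of waiting for the end of the play
- name: Apply pending handlers
  meta: flush_handlers

# exportfs -v only lists the current exports, so the task never changes anything
- name: List active NFS exports
  command: "exportfs -v"
  register: __exports
  changed_when: false

- name: Show active NFS exports
  debug:
    var: __exports.stdout_lines
```

The output should list /shared/services and /shared/projects if the template and the handler did their job.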

Now, we can run the playbook playbook.yml with the following command:

ansible-playbook -i 00_inventory.yml -k playbook.yml

The NFS server is now ready.

Create the Docker Swarm cluster

Now that we have created the NFS server, we can create the Docker Swarm cluster.

To do this, we need to update the playbook playbook.yml with the following content:

- name: install nfs_server
  hosts: nfs_server
  become: yes
  roles:
    - nfs_server
  tags:
    - nfs_server

- name: install swarm cluster
  hosts: docker
  become: yes
  roles:
    - docker
    - swarm
    - nfs_client
    - tooling

In this new version of the playbook, we add a second play named install swarm cluster, which manages the servers that are part of the group docker.

It will execute 4 roles:

- docker: This role will install Docker on the servers

- swarm: This role will create the Docker Swarm cluster

- nfs_client: This role will mount the directories that are shared by the NFS server

- tooling: This role will install some tools that will be useful to manage the Docker Swarm cluster

Now, we need to create the role docker. To do this, run the command ansible-galaxy init roles/docker to create the role.

Then, we need to edit the file roles/docker/tasks/main.yml with the following content:

- name: add gpg key
  apt_key:
    url: "{{ docker_repo_key }}"
    id: "{{ docker_repo_key_id }}"

- name: add docker repo
  apt_repository:
    repo: "{{ docker_repo }}"

# The apt module accepts a list of packages directly, so no loop is needed
- name: install docker and dependencies
  apt:
    name: "{{ docker_packages }}"
    state: latest
    update_cache: yes
    cache_valid_time: 3600

# "groups" with "append: yes" adds docker as a supplementary group;
# "group" would replace the primary group of the user instead
- name: Add user to docker group
  user:
    name: vagrant
    groups: docker
    append: yes

- name: start docker
  service:
    name: docker
    state: started
    enabled: yes

This role will install Docker on each server of the cluster and it will add the user vagrant to the group docker in order to be able to execute the docker command without sudo.
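The tasks above rely on four variables (docker_repo_key, docker_repo_key_id, docker_repo and docker_packages) whose values are not shown in this post. As a sketch, here is what roles/docker/defaults/main.yml could contain. All values are assumptions for an Ubuntu amd64 guest and should be adapted to your distribution.

```yaml
# roles/docker/defaults/main.yml
# Assumed values for an Ubuntu amd64 guest, not taken from the original role
docker_repo_key: "https://download.docker.com/linux/ubuntu/gpg"
docker_repo_key_id: "9DC858229FC7DD38854AE2D88D81803C0EBFCD88"
# ansible_distribution_release is a gathered fact (the Ubuntu codename)
docker_repo: "deb [arch=amd64] https://download.docker.com/linux/ubuntu {{ ansible_distribution_release }} stable"
docker_packages:
  - docker-ce
  - docker-ce-cli
  - containerd.io
  # Docker SDK for Python, needed on the hosts by the docker_* Ansible modules
  - python3-docker
```

The last package matters for the following roles: modules such as docker_swarm run on the target host and need the Docker SDK for Python there.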

After we ensure that Docker is installed on each server, we need to create the role swarm. To do this, run the command ansible-galaxy init roles/swarm to create the role.

Then, we need to edit the file roles/swarm/tasks/main.yml with the following content:

- name: check/init swarm
  docker_swarm:
    state: present
    # Specific for vagrant environment
    advertise_addr: enp0s8:2377
  register: __output_swarm
  # "==" compares whole host names; "in" on a string would also match substrings
  when: inventory_hostname == groups['managers'][0]

- name: install worker
  docker_swarm:
    state: join
    timeout: 60
    advertise_addr: enp0s8:2377
    join_token: "{{ hostvars[groups['managers'][0]]['__output_swarm']['swarm_facts']['JoinTokens']['Worker'] }}"
    remote_addrs: "{{ groups['managers'][0] }}"
  when: inventory_hostname in groups['workers']

As you can see, there are 2 tasks in this role.

The first task is to create the Docker Swarm cluster. It uses the docker_swarm module to create the cluster.

The state of the module is present, which means that it will create the cluster if it doesn't exist.

This task is executed only on the first server of the group managers.

We also use register in order to save the output of the module in a variable. This variable will be used by the second task to join the workers to the cluster.

The second task is to join the workers to the cluster. It uses the docker_swarm module to join the workers to the cluster with the state join. It uses the variable __output_swarm to get the join token of the cluster.
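To confirm that the cluster has the expected shape, we could append a small check to the end of roles/swarm/tasks/main.yml. This is a sketch that is not part of the original role: it uses the docker_swarm_info module on the first manager, and the variable name __swarm_info is an assumption.

```yaml
- name: Gather swarm information from the first manager
  docker_swarm_info:
    nodes: yes
  register: __swarm_info
  when: inventory_hostname == groups['managers'][0]

# The docker group contains the hosts of both managers and workers
- name: Check that every host of the docker group is a swarm node
  assert:
    that:
      - __swarm_info.docker_swarm_active
      - __swarm_info.nodes | length == groups['docker'] | length
  when: inventory_hostname == groups['managers'][0]
```

With the inventory of this post, the assertion expects 3 nodes: 1 manager and 2 workers.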

You have initialized the Docker Swarm cluster!

However, you still need to create the role nfs_client in order to share your config files with the other servers of the cluster.

To do this, run the command ansible-galaxy init roles/nfs_client to create the role.

Then, we need to edit the file roles/nfs_client/tasks/main.yml with the following content:

- name: Ensure nfs-common installed
  apt:
    name: nfs-common
    state: present
    update_cache: yes
    cache_valid_time: 3600

- name: ensure directories exists
  file:
    path: "/shared/{{ item }}"
    state: directory
  with_items: "{{ nfs_server_dir_data }}"

- name: Mount an NFS volume
  mount:
    src: "{{ groups['nfs_server'][0] }}:/shared/{{ item }}"
    path: "/shared/{{ item }}"
    opts: rw
    state: mounted
    fstype: nfs
  with_items: "{{ nfs_server_dir_data }}"

This role will ensure that you have the package nfs-common installed on each server of the cluster.

Then, it will create the directories /shared/services and /shared/projects and it will mount the directories using the module mount.
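One detail to watch: nfs_server_dir_data was defined in the defaults of the nfs_server role, and as far as I know role defaults are only visible in the play that runs that role. Since nfs_client runs in a different play, the variable has to be defined somewhere both plays can see it. A minimal sketch, assuming a group_vars folder next to the inventory file (this file is not part of the setup described above):

```yaml
# group_vars/all.yml (hypothetical file, placed next to 00_inventory.yml)
# Variables defined here are visible to every host, in every play and role
nfs_server_dir_data:
  - "services"
  - "projects"
```

Group variables take precedence over role defaults, so the nfs_server role keeps working with the same list.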

Congratulations, you have created the Docker Swarm cluster 🍾. Now let's see how to deploy services on it.

Deploy services on the Docker Swarm cluster

In this section, we will deploy two services on the Docker Swarm cluster:

- Traefik : This service will be used as a reverse proxy to expose the services that are deployed on the cluster

- Docker Swarm Visualizer : This service will be used to visualize the Docker Swarm cluster and the services that are deployed on the cluster

To deploy these services, we need to create the role tooling. To do this, run the command ansible-galaxy init roles/tooling to create the role.

Then, we need to edit the file roles/tooling/tasks/main.yml with the following content:

- name: create traefik network
  docker_network:
    name: traefik_net
    driver: overlay
  when: inventory_hostname in groups['managers']

- name: Deploy traefik service
  docker_swarm_service:
    name: traefik
    placement:
      constraints:
        - "node.role==manager"
    publish:
      - { published_port: "80", target_port: "80" }
      - { published_port: "443", target_port: "443" }
    mounts:
      - source: /var/run/docker.sock
        target: /var/run/docker.sock
        type: bind
        # docker_swarm_service spells this suboption "readonly"
        readonly: yes
    restart_config:
      condition: any
      delay: 30s
      max_attempts: 5
    networks:
      - "traefik_net"
    # --providers.docker.swarmmode below is a Traefik v2 option; Traefik v3
    # moved swarm support to a separate provider, so pin a v2 tag if needed
    image: "traefik:latest"
    args:
      - "--log.level=INFO"
      - "--api.dashboard=true"
      - "--entryPoints.http.address=:80"
      - "--entryPoints.api.address=:8080"
      - "--entryPoints.https.address=:443"
      - "--providers.docker.swarmmode"
    labels:
      traefik.enable: "true"
      traefik.swarmmode: "true"
      traefik.docker.network: "traefik_net"
      traefik.http.routers.traefik-public-http.rule: "Host(`traefik.swarm`)"
      traefik.http.routers.traefik-public-http.entrypoints: "http"
      traefik.http.routers.traefik-public-http.service: "api@internal"
      traefik.http.services.traefik-public.loadbalancer.server.port: "8080"
    replicas: 1
  # "==" compares whole host names; "in" on a string would also match substrings
  when: inventory_hostname == groups['managers'][0]

- name: install visualizer
  docker_swarm_service:
    name: visualizer
    image: dockersamples/visualizer
    #publish:
    #  - { published_port: 8080, target_port: 8080}
    networks:
      - "traefik_net"
    placement:
      constraints:
        - "node.role==manager"
    mounts:
      - source: /var/run/docker.sock
        target: /var/run/docker.sock
        type: bind
        readonly: yes
    restart_config:
      condition: any
      delay: 30s
      max_attempts: 5
    labels:
      traefik.enable: "true"
      traefik.swarmmode: "true"
      traefik.docker.network: "traefik_net"
      traefik.http.routers.visu-public-http.rule: "Host(`visu.swarm`)"
      traefik.http.routers.visu-public-http.entrypoints: "http"
      traefik.http.services.visu-public.loadbalancer.server.port: "8080"
  when: inventory_hostname == groups['managers'][0]

The first task creates the network traefik_net that will be used by Traefik and by the services it exposes.

It must use the overlay driver because, in swarm mode, the services attached to it can run on different nodes.

The second task deploys the service traefik. It uses the module docker_swarm_service to deploy the service.

I won't explain every option of the module here; they are described in the module documentation.

The third task deploys the service visualizer. It also uses the module docker_swarm_service.

You can now run the playbook playbook.yml to deploy the services on the cluster.

ansible-playbook -i 00_inventory.yml -u vagrant -k playbook.yml

Before accessing the services, you need to add the following line to the file /etc/hosts of your computer:

192.168.56.10 traefik.swarm visu.swarm

You can now access the visualizer service at the following URL: http://visu.swarm
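The same check can be automated from Ansible. Here is a minimal sketch, assuming a hypothetical playbook check-visu.yml run from your computer. It sends the Host header explicitly, so it does not depend on /etc/hosts; the variable name __visu and the retry values are assumptions.

```yaml
# check-visu.yml (hypothetical file name)
- name: Check the visualizer through Traefik
  hosts: localhost
  connection: local
  gather_facts: no
  tasks:
    - name: Request visu.swarm on the manager node
      uri:
        url: "http://192.168.56.10/"
        headers:
          Host: "visu.swarm"
        status_code: 200
      register: __visu
      # The service may need some time to start, so retry for about a minute
      retries: 10
      delay: 6
      until: __visu.status == 200
```

Because Traefik routes on the Host header, requesting the IP address of the manager with Host: visu.swarm reaches the same router as the browser does.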

Deploy a stack on the Docker Swarm cluster

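In this section, we will deploy a stack on the Docker Swarm cluster. A stack is a group of services that are described in a single Compose file and deployed together on the cluster. The approach differs from the previous section: instead of declaring each service in an Ansible task, we copy a Compose file to the manager and deploy it with the module docker_stack.

First, add the following content to the playbook playbook.yml: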

- name: deploy wordpress stack
  hosts: managers
  become: yes
  roles:
    - stack-app

Then, you need to create the role stack-app. To do this, run the command ansible-galaxy init roles/stack-app to create the role.

Then, we need to edit the file roles/stack-app/tasks/main.yml with the following content:

- name: install jsondiff
  apt:
    name: python3-jsondiff
    state: present
    update_cache: yes
    cache_valid_time: 3600

- name: Check if stack folder exists under /shared/configs
  file:
    path: "/shared/configs/wordpress"
    state: directory

- name: Copy stack compose file to /shared/configs
  copy:
    src: wordpress-stack-compose.yml
    dest: /shared/configs/wordpress/wordpress-stack-compose.yml

- name: deploy stack
  docker_stack:
    name: wordpress
    state: present
    compose:
      - /shared/configs/wordpress/wordpress-stack-compose.yml

This role first ensures that the package python3-jsondiff is installed on the host, because the module docker_stack needs it.

Then, it checks whether the folder /shared/configs/wordpress exists and creates it if not.

Next, it copies the file wordpress-stack-compose.yml to the folder /shared/configs/wordpress. This file contains the definition of the stack wordpress and is located under the folder roles/stack-app/files.

Go to my GitHub repository to see the content of this file.
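Purely as an illustration of what such a stack file can look like, here is a minimal sketch. It is not the file from the repository: the image tags, passwords, volume and network names, and the wordpress router are all assumptions, chosen to match the traefik_net network and the wordpress.swarm hostname used in this post.

```yaml
# wordpress-stack-compose.yml (illustrative sketch, not the repository file)
version: "3.8"

services:
  db:
    image: mysql:8.0
    environment:
      MYSQL_DATABASE: wordpress
      MYSQL_USER: wordpress
      MYSQL_PASSWORD: change-me            # placeholder value
      MYSQL_RANDOM_ROOT_PASSWORD: "1"
    volumes:
      # Local named volume: the data stays on the node that runs the task
      - db_data:/var/lib/mysql
    networks:
      - backend

  wordpress:
    image: wordpress:latest
    environment:
      WORDPRESS_DB_HOST: db:3306
      WORDPRESS_DB_USER: wordpress
      WORDPRESS_DB_PASSWORD: change-me     # placeholder value
      WORDPRESS_DB_NAME: wordpress
    networks:
      - backend
      - traefik_net
    deploy:
      replicas: 1
      # In swarm mode, Traefik reads the labels of the service (deploy.labels)
      labels:
        traefik.enable: "true"
        traefik.docker.network: "traefik_net"
        traefik.http.routers.wordpress.rule: "Host(`wordpress.swarm`)"
        traefik.http.routers.wordpress.entrypoints: "http"
        traefik.http.services.wordpress.loadbalancer.server.port: "80"

volumes:
  db_data:

networks:
  backend:
    driver: overlay
  # Created beforehand by the tooling role
  traefik_net:
    external: true
```

The traefik_net network is declared as external because it already exists; the stack only attaches the wordpress service to it so that Traefik can reach it.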

Finally, it will deploy the stack wordpress using the module docker_stack.

You can now run the playbook playbook.yml to deploy the stack on the cluster.

ansible-playbook -i 00_inventory.yml -u vagrant -k playbook.yml

Before accessing the service, don't forget to add the hostname wordpress.swarm to the file /etc/hosts, as we did for the previous services.

Wait a few minutes, and you can then access the WordPress service at http://wordpress.swarm.

Conclusion

In this article, we have seen how to:

- Install an NFS server and mount its shared folders on the client servers

- Install Docker and create a Docker Swarm cluster

- Deploy a service on the Docker Swarm cluster

- Deploy a stack on the Docker Swarm cluster

I hope you enjoyed this article. If you have any questions, feel free to contact me by email!